with Quantized Hand State

Shanghai Innovation Institute, Carnegie Mellon University

Abstract. Dexterous robotic hands enable robots to perform complex manipulations that require fine-grained control and adaptability. Achieving such manipulation is challenging because the high degrees of freedom tightly couple hand and arm motions, making learning and control difficult. Successful dexterous manipulation relies not only on precise hand motions, but also on accurate spatial positioning of the arm and coordinated arm-hand dynamics. However, most existing visuomotor policies represent arm and hand actions in a single combined space, which often causes high-dimensional hand actions to dominate the coupled action space and compromise arm control. To address this, we propose DQ-RISE, which quantizes hand states to simplify hand motion prediction while preserving essential patterns, and applies a continuous relaxation that allows arm actions to diffuse jointly with these compact hand states. This design enables the policy to learn arm-hand coordination from data while preventing hand actions from overwhelming the action space. Experiments show that DQ-RISE achieves more balanced and efficient learning, paving the way toward structured and generalizable dexterous manipulation.

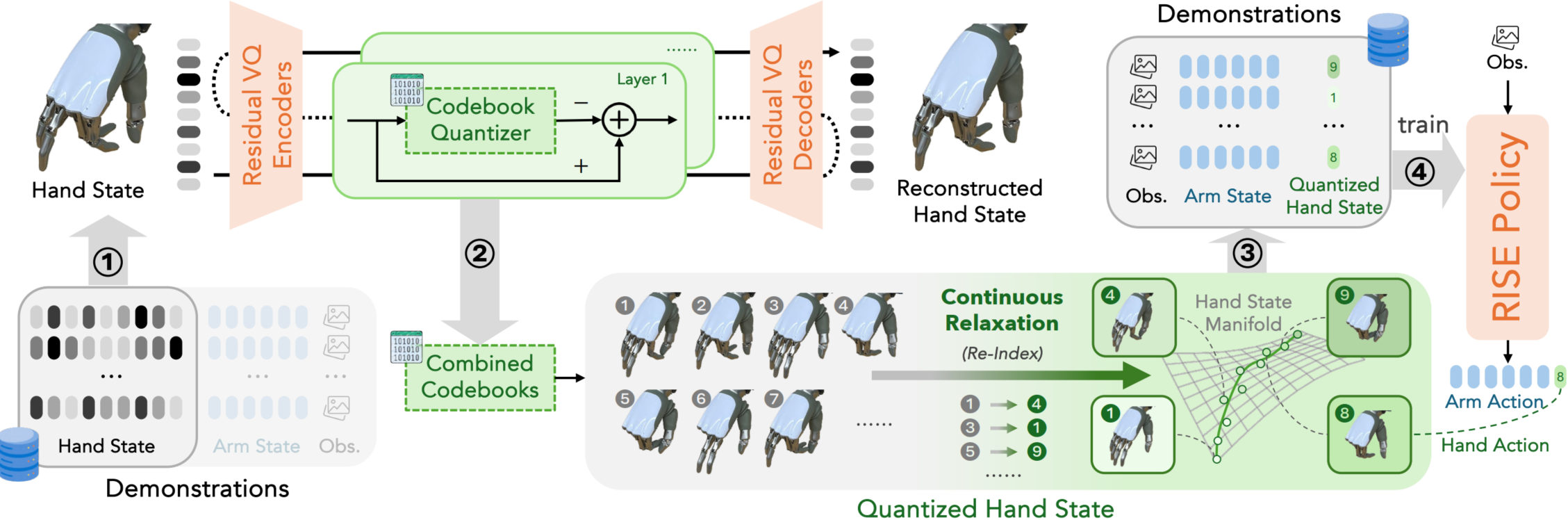

DQ-RISE Policy Architecture

Existing visuomotor policies often suffer from imbalanced learning, where high-dimensional hand actions dominate and arm localization is compromised. Naively separating arm and hand predictions, however, breaks coordination. We address this with the DQ-RISE policy, which builds on the RISE policy and quantizes the hand state into compact patterns via a residual VQ-VAE. To preserve structure, we apply a continuous relaxation that orders the quantized states coherently, so that arm actions can be diffused jointly with them. This compact representation allows precise arm control and smooth arm-hand coordination for dexterous manipulation.
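As a rough illustration of the residual quantization idea, the sketch below encodes a vector with a stack of codebooks, where each stage quantizes what the previous stage left over. It is not the DQ-RISE implementation: a residual VQ-VAE also has a learned encoder and decoder and trains its codebooks, all of which are omitted here. The names `residual_quantize`, `residual_dequantize`, and the tiny 2D `CODEBOOKS` are hypothetical and chosen only for readability.

```python
import numpy as np

# Hypothetical, hand-written codebooks (a trained model would learn these).
CODEBOOKS = [
    np.array([[0.0, 0.0], [1.0, 1.0]]),                # stage 1: coarse codes
    np.array([[0.0, 0.0], [0.1, -0.1], [-0.1, 0.1]]),  # stage 2: fine corrections
]

def residual_quantize(x, codebooks):
    """Return one code index per stage; each stage quantizes the remaining residual."""
    residual = np.asarray(x, dtype=float)
    indices = []
    for cb in codebooks:  # cb has shape (num_codes, dim)
        dists = np.linalg.norm(cb - residual, axis=1)
        k = int(np.argmin(dists))      # nearest code at this stage
        indices.append(k)
        residual = residual - cb[k]    # pass the leftover to the next stage
    return indices

def residual_dequantize(indices, codebooks):
    """Reconstruct the vector by summing the selected code from every stage."""
    return sum(cb[k] for cb, k in zip(codebooks, indices))

codes = residual_quantize([0.9, 1.1], CODEBOOKS)
recon = residual_dequantize(codes, CODEBOOKS)
```

In this toy setting a 2D vector is replaced by two small integers, which is the sense in which the hand state becomes a compact pattern.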

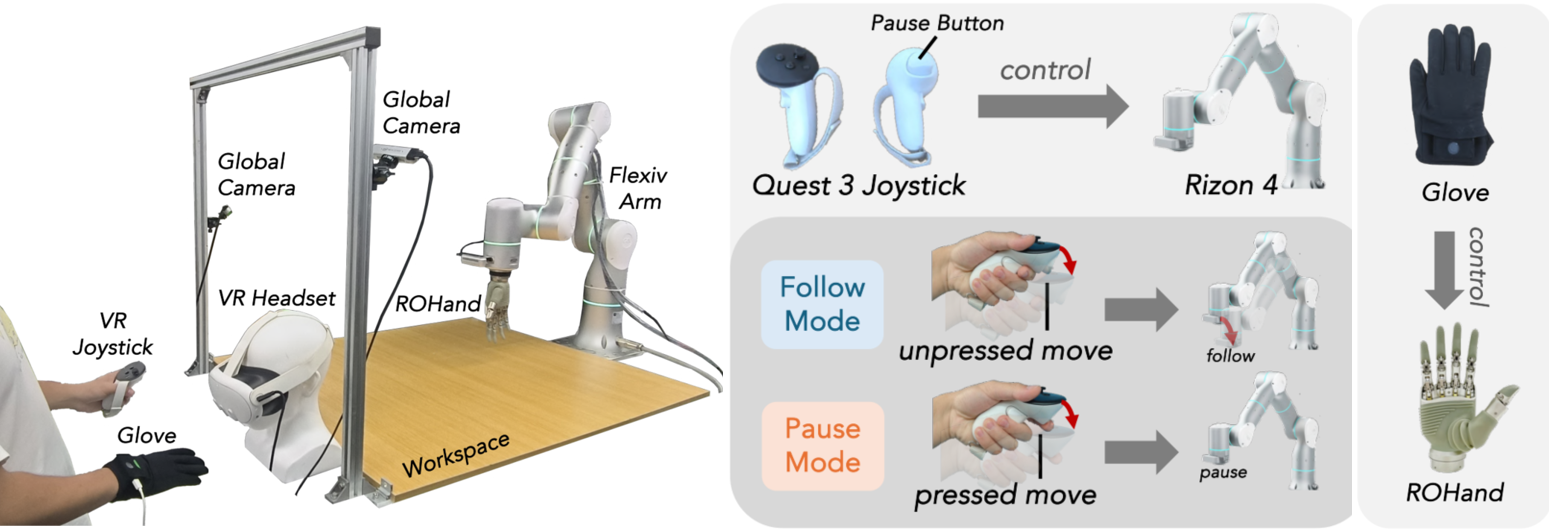

Robot Platform and Hybrid Dexterous Teleoperation System

Our platform consists of a Flexiv robotic arm equipped with an ROHand. During teleoperation, the arm is controlled with a VR joystick; the joystick button pauses arm motion so that the operator can adjust the joystick pose for more intuitive and convenient operation. For hand control, a GForce glove drives the ROHand directly through joint correspondence.
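The pause-and-reposition behaviour can be sketched as a relative (clutched) mapping from joystick motion to arm target. This is only a minimal sketch under assumptions: it handles position only (no orientation), and the class name `ClutchedTeleop` and its interface are hypothetical rather than taken from the actual teleoperation system.

```python
import numpy as np

class ClutchedTeleop:
    """Position-only relative teleoperation with a pause button (hypothetical helper)."""

    def __init__(self, arm_start):
        self.arm_target = np.asarray(arm_start, dtype=float)
        self._prev_joy = None  # last joystick position seen while not paused

    def step(self, joystick_pos, paused):
        joystick_pos = np.asarray(joystick_pos, dtype=float)
        if paused:
            # Arm holds still; drop the anchor so joystick motion is ignored.
            self._prev_joy = None
        else:
            if self._prev_joy is not None:
                # Apply only the joystick displacement since the last step.
                self.arm_target = self.arm_target + (joystick_pos - self._prev_joy)
            self._prev_joy = joystick_pos
        return self.arm_target.copy()

teleop = ClutchedTeleop([0.0, 0.0, 0.0])
teleop.step([0.0, 0.0, 0.0], paused=False)
moved = teleop.step([0.1, 0.0, 0.0], paused=False)    # arm follows the joystick
held = teleop.step([0.5, 0.0, 0.0], paused=True)      # joystick repositioned, arm holds
teleop.step([0.5, 0.0, 0.0], paused=False)            # re-anchor at the new joystick pose
resumed = teleop.step([0.6, 0.0, 0.0], paused=False)  # arm continues from where it was
```

Because only displacements are applied, the operator can move the joystick to a comfortable pose while paused without the arm jumping on resume.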

Experiments

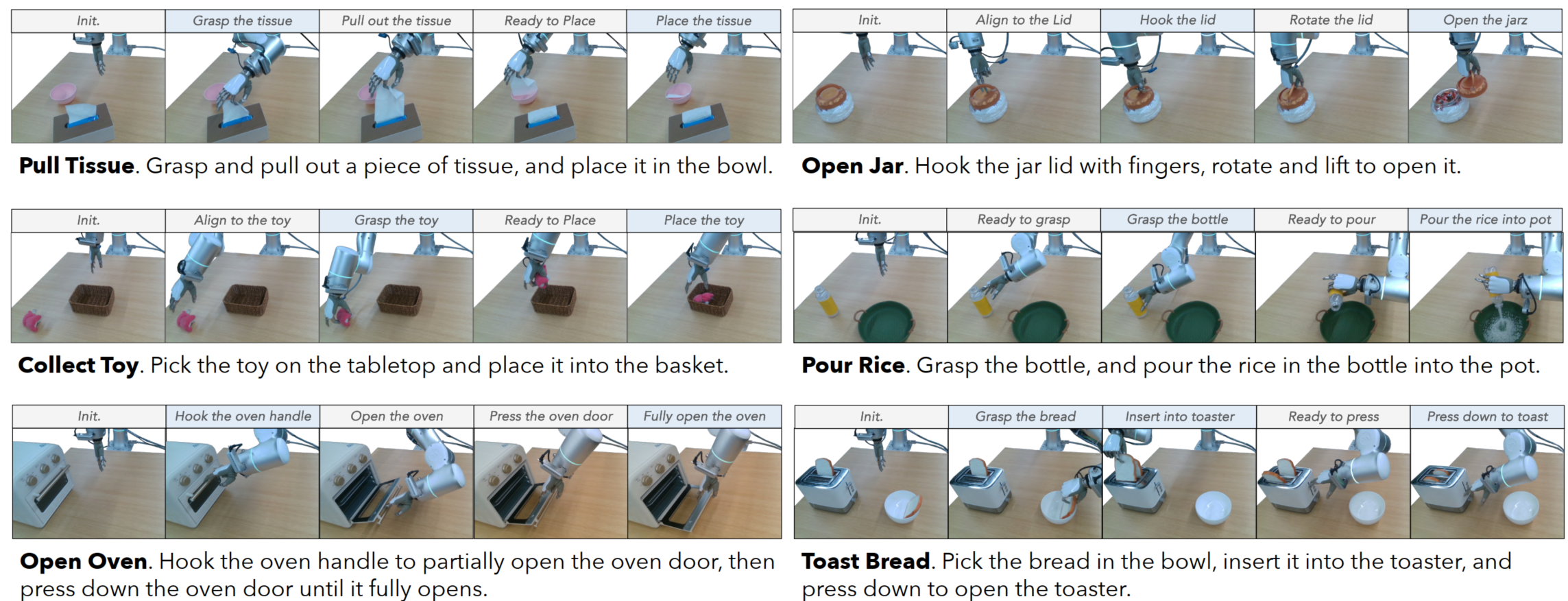

We design six real-world tasks to evaluate the policies on dexterous manipulation, covering pick-and-place operations, articulated object manipulation, tasks with significant rotations, and long-horizon tasks.

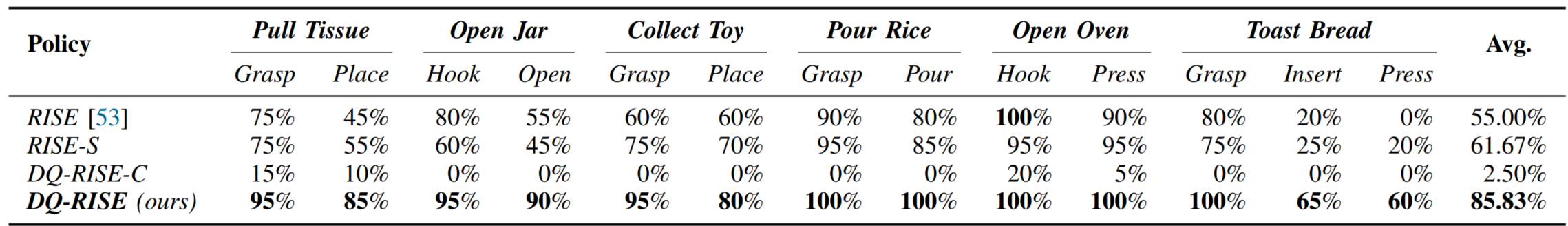

We compare our DQ-RISE policy against three baselines for integrating dexterous hand action prediction:

- The base visuomotor policy (RISE), which predicts concatenated arm-hand action chunks.

- The base visuomotor policy with separate diffusions (RISE-S), which uses two diffusion heads for arm and hand action generation.

- The base visuomotor policy with quantized hand action classification (DQ-RISE-C), which diffuses arm actions first and then classifies quantized hand actions using action features and the predicted arm action.
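One way to see why a concatenated arm-hand action vector can under-weight the arm is to look at how a plain squared-error sum splits across dimensions. The sketch below is a back-of-the-envelope illustration only: the dimensions (7 for the arm, 20 for the raw hand, 2 for a compact hand state) and the equal per-dimension error are hypothetical assumptions, not the actual action sizes or losses used by RISE or DQ-RISE.

```python
import numpy as np

def hand_share_of_squared_error(arm_err, hand_err):
    """Fraction of the total squared error that comes from the hand dimensions."""
    arm_sq = float(np.sum(np.square(arm_err)))
    hand_sq = float(np.sum(np.square(hand_err)))
    return hand_sq / (arm_sq + hand_sq)

per_dim_error = 0.1  # assumed identical error on every dimension

# Hypothetical concatenated action: 7 arm dims + 20 raw hand dims.
raw_share = hand_share_of_squared_error(
    np.full(7, per_dim_error), np.full(20, per_dim_error)
)

# Hypothetical compact action: 7 arm dims + 2 values for the quantized hand state.
compact_share = hand_share_of_squared_error(
    np.full(7, per_dim_error), np.full(2, per_dim_error)
)
```

Under these assumptions the hand accounts for 20/27 of the error in the concatenated case and 2/9 in the compact case, which gives an intuition for how shrinking the hand representation rebalances the objective toward the arm.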

Experiments demonstrate that DQ-RISE consistently outperforms alternative prediction schemes, achieving high success rates across diverse tasks including long-horizon and contact-rich manipulations.

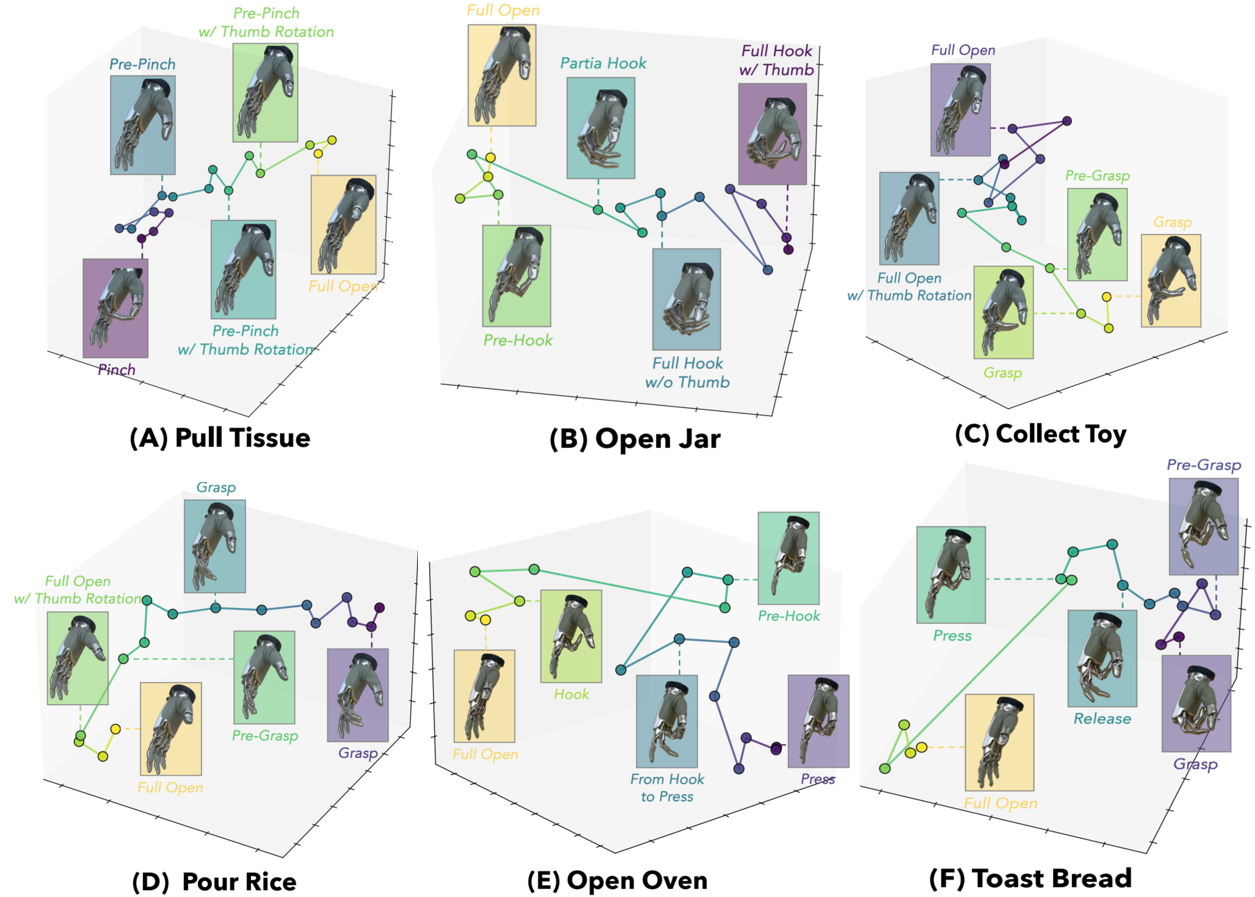

Quantized Hand State after Re-Indexing

Hand states are projected to 3D points with UMAP, and selected points are annotated with their corresponding hand poses for reference. The figure shows that re-indexing during the continuous relaxation makes transitions between codes continuous and interpretable in hand-state space, which supports jointly diffusing arm and quantized hand actions.
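The effect of re-indexing can be illustrated with a small sketch. The ordering rule used here, sorting codes along the first principal direction of the codebook, is an assumption made for illustration; the page does not specify how DQ-RISE orders its codes. The function names and the toy codebook are likewise hypothetical. Once neighbouring indices correspond to neighbouring hand states, an index can be mapped to a scalar in [-1, 1] that a diffusion model can treat as continuous, and rounded back to a code afterwards.

```python
import numpy as np

def reindex_codebook(codebook):
    """Sort codes along the first principal direction (assumed ordering rule)."""
    cb = np.asarray(codebook, dtype=float)
    centered = cb - cb.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    order = np.argsort(centered @ vt[0])  # 1D coordinate of each code
    return cb[order]

def index_to_scalar(k, num_codes):
    """Map code index k in [0, num_codes - 1] to a scalar in [-1, 1]."""
    return 2.0 * k / (num_codes - 1) - 1.0

def scalar_to_index(s, num_codes):
    """Round a (possibly noisy) scalar back to the nearest valid code index."""
    k = int(round((s + 1.0) / 2.0 * (num_codes - 1)))
    return min(max(k, 0), num_codes - 1)

# Hypothetical codebook whose original indices jump around in hand-state space.
codebook = [[0.9, 0.9], [0.0, 0.0], [0.5, 0.5], [0.2, 0.2]]
ordered = reindex_codebook(codebook)
steps = np.diff(ordered[:, 0])  # change in the first coordinate between neighbours
```

After re-indexing, stepping from one index to the next always moves in the same direction through hand-state space, so a small error in the relaxed scalar decodes to a nearby hand state rather than an unrelated one.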

Pull Tissue

Open Jar

Collect Toy

Pour Rice

Open Oven

Toast Bread

